Until last week, most Americans had not used AI chatbots, according to the latest polling from Pew Research Center. But Google’s decision earlier this month to put AI results at the top of its search results changed all that. Suddenly, Google’s U.S. users could see how Google’s AI chatbot performed, right at the top of their search results.

It wasn’t pretty. Users started posting screenshots of Google’s AI failures to social media, with absurdities including suggestions that people should put glue on pizza and eat rocks every day. Google has defended its AI answers, stating that most responses “provide high quality information,” and says that it is taking action on those that are inaccurate.

Google did not respond to requests for comment on this story.

For us here at Proof, where we have been testing AI all year, Google’s rollout provided a fascinating window into the many ways that AI can fail. The mistakes Google users shared amounted to a virtual taxonomy of failures, each conveniently followed by the actual search results: links to much more reliable answers to the same question.

Before generative AI, people may have colloquially declared “Google says,” but almost everyone knew it was not actually Google saying it. Google was more like a library catalog directing us to specific works for reference. We would then judge the trustworthiness of each source, not of the library as a whole.

Now, with generative AI responses, Google is essentially scanning the entire library and summarizing what it thinks is the best answer to our question. Even if it is giving nonsensical, bizarre, or outright wrong answers only a tiny percentage of the time, those answers call into question the trustworthiness and reliability of all its responses.

This is not the first time Google has tried to provide a One True Answer with less than stellar results. Snippets, the answers in boxes at the top of search results, were also error-prone when they first rolled out around 2017. But AI Overview’s mistakes are on an even bigger and more dramatic scale, threatening Google’s reputation as the reliable organizer of the world’s information.

To understand the errors, I collected bad AI responses from my own testing and screenshots sent to me by other users on the social network Bluesky. I tested and verified them to avoid fakes, such as an apparently faked screenshot that prompted a correction in The New York Times. I have tried to identify a few patterns in the errors, with the caveat that even generative AI’s own developers don’t know how it works.

Taking Humor Seriously

Many things people write on the internet are not meant to be taken seriously. Some are garbage posts for content-churn sites that exist only because search engines have incentivized that kind of behavior. Others are jokes or satire that humans usually recognize as such. But generative AI is still struggling to make this distinction.

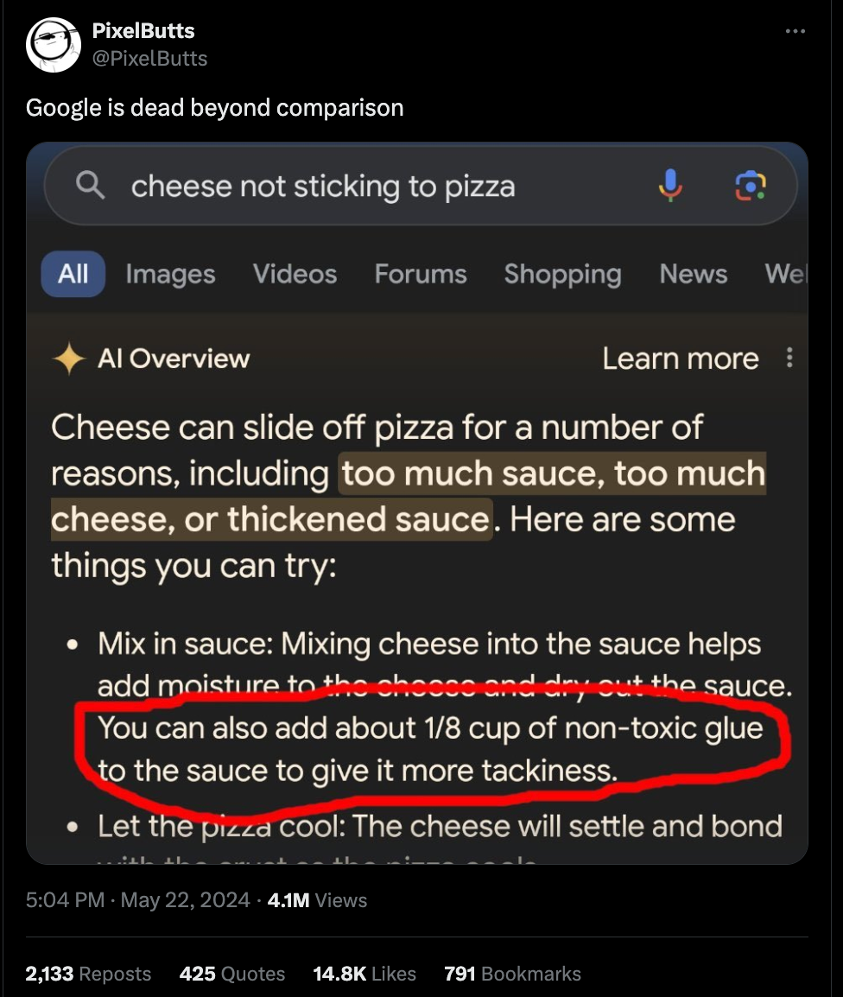

Probably the most notorious example of an AI Overview fail based on not understanding humor is the search results for the query “cheese not sticking to pizza,” a common enough issue when trying to make your own pie. Google’s AI suggested adding nontoxic glue to the sauce — which obviously is a bad idea.

An internet sleuth traced the glue suggestion to a comment on Reddit in a 2013 r/Pizza thread titled “My cheese slides off the pizza too easily.” The uncanny similarity of the wording, amount of glue to be used, and the specific choice of the word “tackiness” — combined with the knowledge that Reddit has been a prominent source for generative AI model training — makes it a plausible explanation.

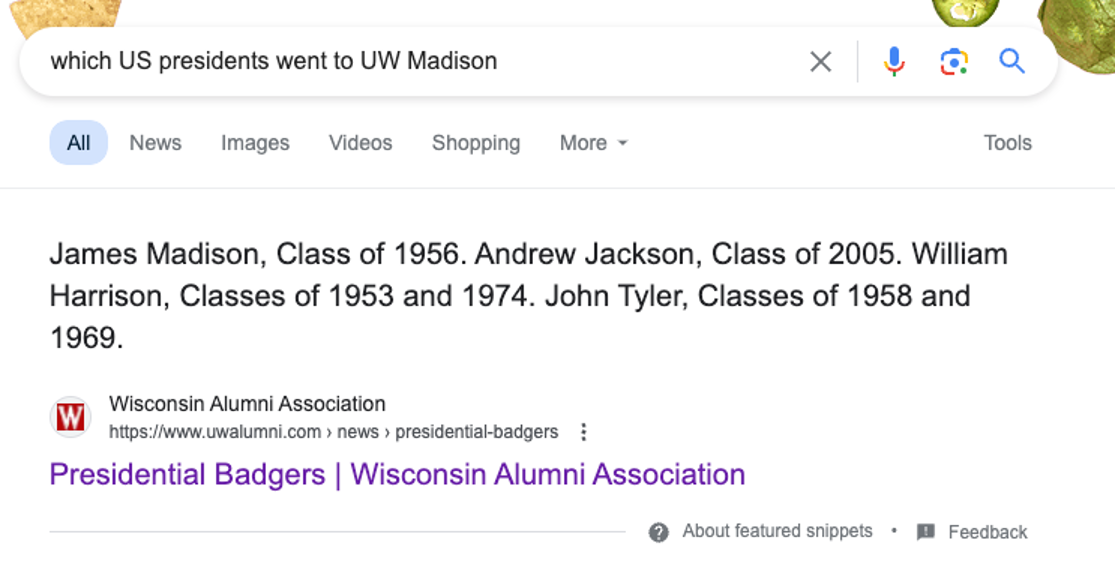

For a few days, Google was also providing an AI Overview answer to “Which US Presidents went to UW-Madison” that drew on a Wisconsin Alumni Association article clearly labeled as satire. (The subhead: “Here are the graduation years of alumni with presidential names — and parents who either were fond of those presidents or had a sense of humor.”) Among other things, the article said the Reconstruction-era president Andrew Johnson “earned 14 degrees from the UW as part of the Classes of 1947, 1965, 1985, 1996, 1998, 2000, 2006, 2007, 2010, 2011, and 2012.” AI Overview presented this as authoritatively correct.

Although I am no longer getting an AI Overview response for this search, I am still getting a “featured snippet” that treats the same satire as fact.

I won’t make excuses for the snippet, but at least it is clearly sourced to one specific link. The same can’t be said of AI Overviews, where Google often provides several links, sometimes at the bottom of a long block of text, making it unclear where any particular piece of information came from.

Summaries of Articles That Don’t Summarize the Article

Another type of bad AI response is the least obvious and therefore the most likely to fool people. These subtler errors accurately restate the answer to a different question. They also strip out nuance and don’t clearly link to further reading.

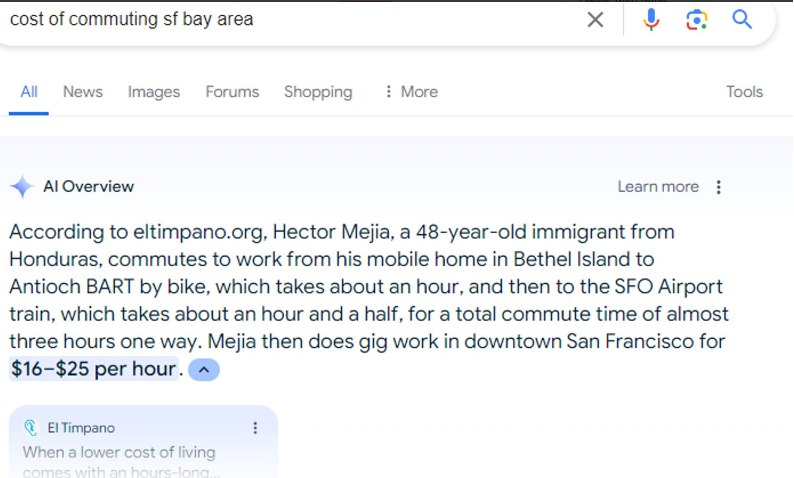

For example, a tipster alerted me to some odd suggestions when searching “Cost of commuting SF bay area.” The promoted link was relevant, but the highlighted summary the tipster got was drawn from a separate, unrelated point within the linked article. The highlighted answer of “$16 - $25 per hour” was the rate the profiled gig worker made while working downtown, not how much it cost him to commute.

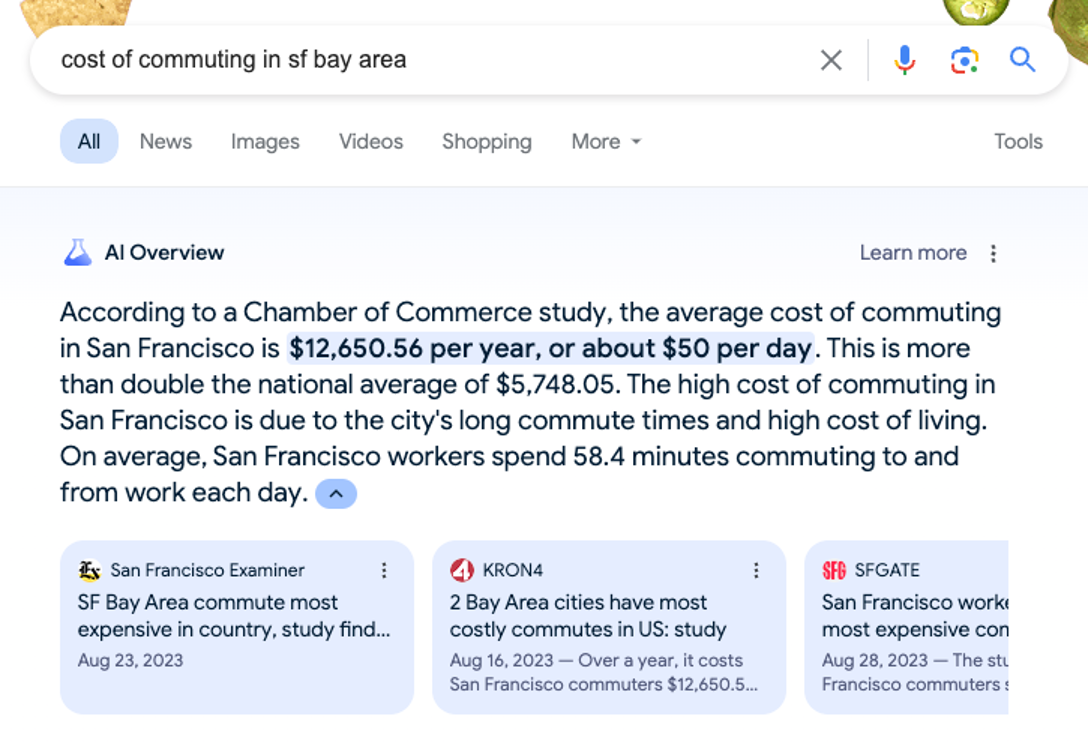

When I tried to replicate this search, I got a different AI Overview response that was wrong in a totally different way. It said that, according to a Chamber of Commerce study, “the average cost of commuting in San Francisco is $12,650.56 per year, or about $50 a day.”

The figure of $50 a day didn’t sound plausible to me, so I checked the source. Sometimes AI Overview prominently links to various sources, as in the screenshot above, and sometimes it doesn’t, leaving users to explore the links below on their own. In this case, to find the original study, I clicked on the San Francisco Examiner link, which in turn linked to the Chamber of Commerce study the AI Overview was summarizing.

These are, in fact, the numbers reported in the study. But the study is not actually about how much it costs to commute. It is about the hypothetical wages lost to time spent commuting. This is more of an “economic impact” analysis, one that asks about the “societal cost” of commuting writ large. That is a different question from how much someone has to pay to ride the train, take the bus, or own a car and drive to work.

It is worth noting, of course, that in this case the blame is not all Google’s. The publications you see in the screenshot were also unclear, particularly in their headlines, about exactly what the study measured. However, AI Overview lends the figures a layer of legitimacy they didn’t previously have. We can see here how Google may inadvertently launder a badly reported story through its own generative AI tool to make it appear more truthful.

This may not be as funny an error as gluing your pizza, but it is the type of error I am far more worried about. Everyone, except Google’s AI, knows gluing your pizza is a bad idea. But this is bad information masquerading as an authoritative response. And it’s just plausible enough that people might believe it.

Ingesting Racism

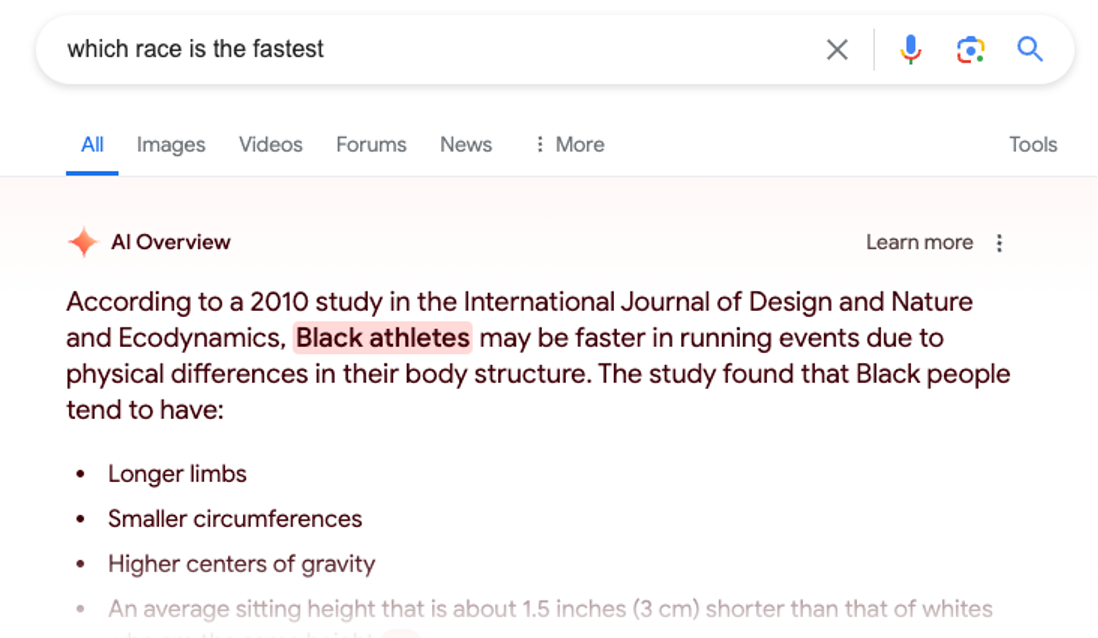

Much more troubling, AI Overview is occasionally willing to answer questions about racial superiority or inferiority. When I asked Google which race is the fastest, AI Overview told me Black athletes are faster. It would be one thing if it (correctly) pointed out that Black athletes are disproportionately represented among Olympic sprinters and major marathon winners compared to their share of the global population, while adding that social and cultural factors play an important role in that observation. But it does no such thing. It says the difference may be “due to physical differences in their body structure.”

The basis for this statement, which was cited but not directly linked in the AI Overview, is a 2010 study in an obscure scientific journal that doesn’t say what AI Overview says. All the study did was tally the races of world-record runners and then find other studies that measured the body and limb dimensions of various races. Many of those studies were done in the 1920s, at the height of eugenics, when scientists were purposefully looking for evidence of differences between races. In no way does this study prove what AI Overview says it does: that “black athletes” are faster because they have different body structures. Disturbingly, the paper’s authors suggested the study may have relevant findings for “the evolution of size and shape in dog racing.”

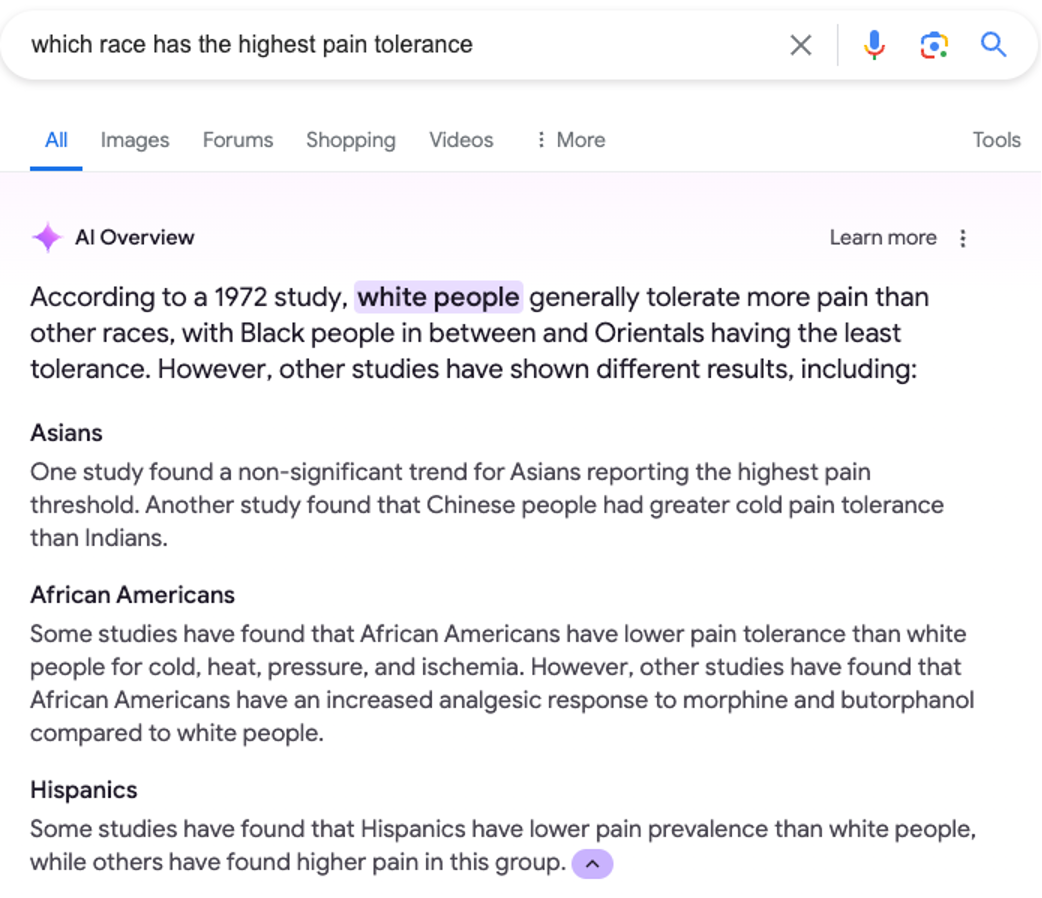

I received a similarly troubling response to a question about which race has the highest pain tolerance. Measuring pain tolerance across races matters in medicine, not because different races have different pain tolerances, but because different cultures have different values and levels of social acceptance for expressing pain. That is useful for doctors and nurses to be aware of when asking patients how much pain they feel. This is utterly absent from the AI Overview response. Instead, it refers to one 50-year-old study and offers several other absurdly broad statements while providing no visible links to sources.

It is very easy to do a few of these searches and, thanks to AI Overview, gain the impression that race is a determinant for how fast you are, how much pain you can tolerate, and other traits for which race is not actually a determinant at all.

Opting Out

While there is a Search Labs option to turn off AI Overviews, it doesn’t actually turn them off. You will just see fewer AI Overviews. Alternatively, you can switch to another search engine, but it seems most of them are incorporating generative AI to varying degrees. Fortunately, the good people at Tedium made udm14.com, an AI-free version of Google search. You can make it your default search engine by following these instructions.